The centerpiece of today’s post is an extensive interview with Chuck Thorpe. Thorpe, now President of Clarkson University, spent over two decades at Carnegie Mellon University. These years were largely spent as a student, project manager, and PI working on Carnegie Mellon’s autonomous vehicle vision research. The primary goal of the interview was to better understand how he and others managed systems contracts at CMU — CMU had a strong comparative advantage in this style of work while Thorpe was there. Throughout the interview, he goes on to paint a broader picture of how the CMU computer science department functioned differently than others at the time.

As a preface to the interview, I wrote the following ‘Introduction’ section to highlight why CMU is a singularly important department in autonomous vehicle history. After reading the introduction, the reader has three options for consuming the rest of the piece. For the bookworms, I have provided an abridged transcript of my meeting with Chuck Thorpe, which picks up after the introduction. For the audiophiles, the full audio of the interview is available on both Substack and Spotify. And for the YouTube aficionados, I share a YouTube link of the Zoom interview.

Introduction

The first piece in the all-time great DARPA contractors trilogy explored the importance of BBN’s unique structure, embodying the “middle ground between academia and the commercial world.” BBN did so well in this role that it earned itself the moniker “Third Great University of Cambridge.” BBN demonstrated what was possible if a firm staffed itself with the very best engineering researchers and cared more about technical novelty than about maximizing profit. The flip side of the coin is CMU and its AI work in the late 1900s. Much of CMU’s AI work in this period answers the question, “What if an academic engineering department made its comparative advantage novel systems contracts?” CMU was an academic department eagerly embracing a style of work more common to the defense primes in the DARPA ecosystem.

In DARPA’s Autonomous Land Vehicle project — covered in the ALV section of the ARPA playbook — CMU stood out as the lone contractor involved who was excited to take on both the burden of novelty and the burden of systems integration work. The academics and the project’s main commercial firm had very different incentives when it came to these issues. As I wrote in the earlier ALV piece:

The contractors who found themselves working on the reasoning system vs. the vision system had very different interests and incentives. Most of the reasoning contractors were commercial firms and had the incentive to fight for a broader scope of work and more tasks getting assigned to the reasoning system because that meant better financial returns to the company. Most of the vision contractors, such as the University of Maryland, were academics and largely content to let that happen…if it meant they got to focus primarily on specific vision sub-tasks that were more in line with the size and scope of projects academics often undertook. For example, the private companies felt that building up much of the systems mapping capabilities, knowledge base, and systems-level work should fall to them.

CMU and one of its project leaders — Chuck Thorpe — seemed to be the strong voice of dissent on many of these matters from the academic community. Ever since CMU had become a major contractor in DARPA’s Image Understanding work, it had taken a rather systems-level approach to attacking vision problems — working with civil and mechanical engineering professors like Red Whittaker to implement their vision work in robotic vehicles — rather than primarily focusing on the component level. The CMU team far preferred this approach to things like paper studies — in spite of the increased difficulty.

As the ALV project wore on, Martin Marietta — the lead systems contractor for the ALV — gradually succumbed to its ‘demo or die’ pressure. As the frequency of demos increased, Martin became much more concerned with technically hitting the benchmarks DARPA specified than pushing forward truly ambitious technological changes. Additionally, many of the academics focusing on vision research concerned themselves more with using their DARPA research funds to improve their performance in somewhat simplified environments that helped them write research papers than to do research that would directly plug into the ALV. With this equilibrium becoming clear, Ronald Ohlander — a former student of Raj Reddy at CMU and the DARPA PM responsible for funding vision research — designated CMU as the systems integrator for his DARPA SCVision portfolio. The Martin Marietta team clearly did not care enough about integrating and testing cutting-edge algorithms. So, he tapped CMU and gave them the funds to properly integrate insights from the research ecosystem.

In the prior piece, I described the situation as follows:

CMU had already, unlike most of the component contractors in the SCVision portfolio, been testing its component technology on its own autonomous vehicle: the Terregator. CMU used the Terregator, often in slow and halting runs, to test the algorithms developed for its road-following work. The Terregator proved extremely useful to this research, but its use had also helped the CMU team learn enough to outgrow the machine.

In CMU’s ongoing efforts to implement sensor information from a video camera, a laser scanner, and sonar into one workable vehicle, the CMU researchers had come to realize that they needed funding for a new vehicle. CMU needed a vehicle that could not just accommodate the growing amount of sensor equipment, but also one that could carry out its tests with computers and grad students either on board or able to travel alongside the vehicle. This would allow bugs to be fixed and iterations between ideas to happen much faster. The vehicle that the CMU computer vision and robotics community was casting about trying to get funding for would come to be known as the NavLab. Fortunately, what they were seeking money for seemed to be exactly what Ohlander felt the SCVision portfolio needed. The funding for the NavLab began around early 1986. The funding set aside for the first two vehicles was $1.2 million, and it was estimated that any additional NavLab vehicles would cost around $265,000.

As Martin was somewhat frantically taken up with its demo schedule, CMU, with its longer time windows, was free to focus on untested technology as well as technology that ran quite slowly but seemed promising in terms of building more accurate models of its environment. As the CMU people saw it, if anything proved promising they could upgrade the computing machine used to operate that piece of the system later on…the vision researchers likely figured that a piece of hardware powerful enough would likely exist soon to help push the idea along — even if not in the next year. Lacking the hard metrics of yearly demos with speed requirements loosely matching how fast the vehicle would need to go in the field, the CMU team could afford to think more along the lines of “how do we build a machine that is as accurate as possible, even if it moves really slowly today?”

As CMU took up the mantle of building SCVision’s true test bed vehicles, it also took over the role of the SCVision portfolio’s true “integrator.”

Things continued like this for a while. Then, after a successful ALV demo in 1987, Martin’s role as the prime DARPA autonomous vehicle contractor suddenly ended. This abrupt shift happened after a panel of DARPA officials and technology base researchers met to discuss phase two of the program. Roland and Shiman wrote in their book on this era of DARPA computing:

Takeo Kanade of CMU, while lauding Martin’s efforts, criticized the program as “too much demo-driven.” The demonstration requirements were independent of the actual state-of-the-art in the technology base, he argued. “Instead of integrating the technologies developed in the SC tech base, a large portion of Martin Marietta’s effort is spent ‘shopping’ for existing techniques which can be put together just for the sake of a demonstration.” Based on the recommendations of the panel, DARPA quietly abandoned the milestones and ended the ALV’s development program.

The CMU researchers and the NavLab — which were remarkably cheap compared to Martin Marietta — continued to be funded. In this way, CMU took its place as DARPA’s top systems integration contractor for driverless vehicles. And it would stay on top of the heap for several decades.

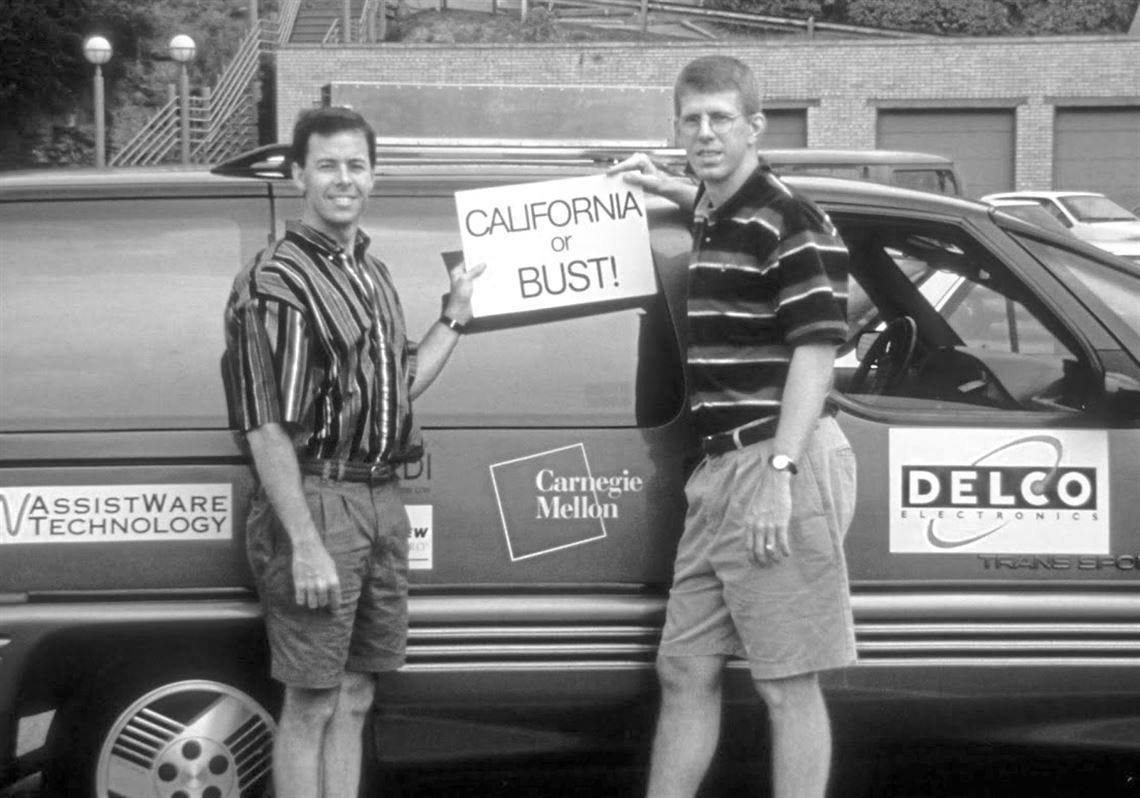

The CMU driverless vehicles were responsible for many (some might even say most) of the practical driverless vehicle breakthroughs in the field's early years. Much of CMU's earliest AI work on these vehicles revolved around the use of things like radar and laser sensors, path planning, and finding ways to integrate inputs from different models into a single set of instructions. But, in the late 1980s, CMU's work abruptly became quite recognizable to the modern eye. In 1988, then-CMU grad student Dean Pomerleau successfully integrated the first neural net-based steering system, his ALVINN system, into a vehicle. In 1995, Dean and fellow grad student Todd Jochem drove the successor to Dean’s ALVINN, the more complex RALPH, across the country on their “No Hands Across America” tour. The RALPH system employed neural nets but also put much more effort into model building and sensor processing. With Dean and Todd on board, the upgraded NavLab was able to drive autonomously for 98.2% of the way on its successful cross-country journey. In a sense, much of CMU’s work culminated in the CMU vehicles' performances in the second DARPA Grand Challenge, held in 2005. Many only remember that Stanford's team won this landmark challenge. But CMU's two vehicles finished a close second and third to Stanford’s vehicle.

At the helm of Stanford’s team was Sebastian Thrun. Thrun had left his CMU research position, which he had held for almost ten years, in 2003 to join Stanford, where he went on to lead its team. In the early years of the field, an astonishing proportion of the best researchers were either trained at CMU or were long-time professors there. In a 2010 oral history, Thorpe described the CMU ties of Sebastian Thrun and many of the early Google driverless car team, saying:

Now, it’s the Google group, and we’re very grateful to Google, but we think of it as the Carnegie Mellon West group. Sebastian, of course, used to be here, and then went to Stanford and Google. Chris [Urmson] is a Carnegie Mellon person on leave. James Kuffner is a Carnegie Mellon faculty member on leave. If you go down through the list, they – everybody except for one on that group either is a Carnegie Mellon person or has a Carnegie Mellon heritage, so that’s our boys. [laughs] And we’re delighted that they’re off with Google, and Google’s given them the researchers to do really cool stuff.

Carnegie Mellon has its fingerprints all over the breakthroughs and researchers from the early decades of autonomous vehicles. It would be hard to argue that CMU’s efforts didn’t move forward the timelines of this field by some number of years. It also seems clear, looking at the history, that the incentives and management structures CMU used to manage projects like this played a major role in setting the university apart.

The university clearly had a comparative advantage in this style of work. So, I decided to ask Chuck Thorpe some questions about how exactly CMU did what it did.

Chuck Thorpe Interview

In the following transcript, I made some cuts to make reading the text version of the interview more efficient. The audio and video files contain the interview in its entirety — which is about 15% longer.

Finding his way to CMU and the early years of the Robotics Institute

Thorpe opened the interview by detailing how exactly he found his way to CMU as a grad student and how CMU’s approach to computing research set it apart from many other universities at the time.

Thorpe: So, I showed up wanting to work on robots. They gave me a book and the robots were all these robot arms and I thought, “Well, that's not what I want to do.” I want to do mobile robots. The reason I came to Carnegie is I was backpacking with my professors from North Park.

One of them said, “What do you want to do?” I said, “Well, artificial intelligence.” “Oh. You ought to go to Carnegie Mellon. Herb Simon just won the Nobel Prize for inventing AI.” I'll tell you the truth. I had to look up Pittsburgh on a map. I had to go to the library and find out what Carnegie Mellon was. And Carnegie Mellon in the seventies was reinventing itself.

It was a very good Western Pennsylvania engineering school that looked at the demographics of Western Pennsylvania and said, “We're going to be a very small, very good Western Pennsylvania engineering school,” and decided that they needed to go national. Now “national” meant that in 1972, once a year, they would go to Chicago to try to recruit students. And every other year they would go to DC.

So, Dick Cyert, the president, bet his presidency on turning Carnegie into a national brand and really expanded it and grew it. He also, Cyert, was a professor of business and his doctrine was comparative advantage. Find out where you can be one of the top two or three in the world and go for it — and don't try to compete broadly.

And a mid-sized university like Carnegie, that makes a lot of sense. So Raj Reddy was talking with Cyert and convinced Cyert that it was his idea, Cyert's, not Raj's, that we could be top two or three in robotics. And Raj said, “But I'm not interested in playing catch-up small ball. If we're going to do it, let's go big.”

So Cyert asked Raj to run the Institute. He got a million-dollar grant from the Navy and he got a million bucks from Westinghouse. And that was the origins of the Robotics Institute.

Gilliam: And how important was it for him to get a big industry contract and a big public sector contract? Was that specifically the goal? To make sure they had one from each?

Thorpe: Specifically the goal was to do both, really crack into ONR (Office of Naval Research) and then into DARPA because they had a lot of money to do very interesting things. But, also, Carnegie has these deep roots in Western Pennsylvania. And if we could work with Western Pennsylvania industry and really help Westinghouse succeed that was a win all the way around.

This was at a time when the steel industry was visibly dying and trying to figure out what was going to save Western Pennsylvania. They thought it was Westinghouse. It turned out that was not completely the story. Westinghouse had its own financial problems. But that notion of trying to see what we could do locally, what we could do with industry, as well as tapping into the US Government was a big deal.

I, then, interjected to provide some historical context and ask a question.

Gilliam: It's interesting to hear you talk about the comparative advantage, because even Karl Compton, who ran the Princeton physics department, was MIT President for a while…he, at one point, wrote a letter in Science in the 1940s. Essentially, he was trying to be polite as he wrote it, but he said (roughly), “We see the talent going into some of the industrial R&D labs from the physics departments. We feel like, to the man, we have higher average talent, but we are not really touching what they're outputting.”

He thought that the firm structure and the concept of comparative advantage, organizing teams in a way that made sense, is what departments should do. It's what made sense. The concept of 20 different budgets [one for each professor] and 20 different fiefdoms [lab groups] to do small research was silly. It made no sense. It's for pure theoretician-type people. But he later became an MIT president and could not succeed at that.

But it seems like Carnegie, in running a lot of this work (it seems like Allen Newell was calling it project-style work, the Raj Reddy style of work), succeeded in a lot of that ethos. Would you say that's fair?

Thorpe: So, a lot of this starts with Herb Simon saying, “What can we do really well?”

Herb was a founder of the business school, was a founder of the computer science department, was a founder of cognitive science (the psychology department). Herb then brought in Newell. And the two of them saying, “How do people think and how can we encode that in a computer?”…that sort of set the framework for so much of what has made Carnegie successful.

That whole AI and cognitive psych paradigm…if you look at the growth of the computer science department and then school, there's very good theoretical computer science, but the rest of it is all “AI+”. Yeah, AI + linguistics and you get machine translation. AI + learning and you get computational learning. AI + human interaction and now you get the Human Computer Interaction Center. AI + mechanical engineering and you get robotics.

So, it was the combination of leadership from people like Cyert saying, “I'm going to invest a little bit of money here.” And the intellectual leadership of Simon and Newell saying, “This is going to be a growth area.” Simon and Newell, in turn, hired Raj Reddy, poached him from Stanford. That group of them hired [Takeo] Kanade, poached him from Kyoto. And that sort of set us off and running.

The importance of Raj Reddy, what it means to be ‘project-oriented’, and how CMU utilized its grad students

Gilliam: Could I read you an excerpt from Allen Newell's oral history where he talks about Carnegie Mellon's project-oriented style and Raj Reddy?

Because he uses some terms that I'm sure you're going to hear and you're going to go, “I know exactly what he means!” But to me…I knew what I thought it meant — or maybe it's what I wanted it to mean — so I'd love to run it by you. So I'll read you two remarks and then I'll lead you in with the question.

Alright, so the first remark is:

NORBERG [Interviewer]: You had the money along with Green and Perlis [talking about an extra injection of DARPA funds]. What did you people decide to do with it?

NEWELL: I don't know... support people... just spend it. We didn't decide to do anything with it. One of the features of this environment was that it was decidedly un-entrepreneurial. That seems, in one respect, like a contradiction in terms, but we never took these funds and decided we were going to go out and do big things with these funds. That was an attitude typified the operation around here up until Raj [Reddy] shows up.

And remark two is in reference to the 1970s with DARPA. There were a lot of changing political tides, as you’re aware, and they’re talking about the changing goals of DARPA when DARPA is giving out funds. Newell says:

Newell: ...And so, therefore, we have been pushed towards being applied. A response I have, which is not an attempt to change that, that is exactly what's happened. CMU has gone along with that in spades. We started going along with that in the 1970s. I can remember having conversations with Howard Lackler [Wactlar] as we were sitting there under the fixed funds with inflation and still trying to get an extra PDP-10. We were allowing that environment to shape us up so that we were not in fact anything like? And we became project-oriented all in that period. We were not project-oriented in the 1960s at all.

…

Newell: ...The corresponding thing is the cats that want to play that game -- the Raj Reddys, the Canatis [Kanades], and so forth, the Coons -- [find] the game they play is how to do that and do basic science at the same time within the same budget.

So I guess my initial question with that is, as Newell’s talking about ‘project-oriented,’ what does it mean to be project-oriented? How does that differ from business-as-usual AI research on a DARPA grant, the way you might see in a Marvin Minsky lab or something like that?

Thorpe: Oh, so Minsky is a very interesting contrast. Minsky wrote this book, The Society of Mind, where you could read…it was, I don't know, 50 two-page chapters. And you could read it in any order and made just as much sense in any order as in any other order.

That's kind of the MIT notion [presumably Minsky’s MIT AI group] of how ‘mind’ works, and their notion of how research works. It’s that you've got 50 independent projects and, together, something may emerge from that.

Carnegie shows up with people like Raj, who says, “I want to conquer speech. I'm going to put together a big team, and these people are going to be working on the phoneme level, and these people are going to be working on the lexeme level, and these people are going to be working on the syllable level, and these people are going to be working on the word level, and these people are going to be working on the sentence level. And there's going to be an architecture which connects them up and down. And we're going to have to have specialized hardware for all of this to work on.”

And so, Raj says, “Let's build this, this project.” And it was a project that had 16 different dimensions, all of which came together. Now, Raj was also flexible enough to say…when somebody showed up and said, “You know, I've got this idea for a completely different method. Let's not bother with all of the structure. Let's just have a little system where everything sort of learns and it figures out all of those mechanisms.” So that was Hearsay (demo), and that was Harpy (demo), and that was the two different systems.

That competition between big structured AI and machine learning, data-intensive AI has been going on at Carnegie for the last 50 years.

Gilliam: To get very in the weeds here…you talk about putting together a big team. And there's a lot of grad students involved in that. And it seems like on the one hand, ‘project-oriented’…it almost sounds like more of a firm structure than an academic team. A lot of the grad students are the employees. But there seems to be some kind of balance where the grad students are the employees — for example, with the ALV or NavLab where you had “on board grad students” — but also the grad students need a thesis.

With a thesis like yours [working to improve the operating speed of a sensor already in use on a test vehicle]…I could see how your thesis would have folded into a big team with that project's goals quite well. Because you were working on a specific sensor technology, and with Blackboard you could fold all that in. But then there's somebody like Dean Pomerleau [whose 1988 ALVINN system implemented neural net steering on CMU’s vehicle] on the other end of things. It's unclear how his project can mesh with everything.

Can you talk about how those management decisions are made? Because I guess the big goal of what I'm trying to do is to help people understand what the CMU approach is on a management level. So this would be great.

Thorpe: So let me back up and say a couple more things about the big CMU picture, and then I'll talk about Dean and how we organized the NavLab, in particular.

The big CMU picture was fundamentally changed when DARPA sent a telegram (this tells you how long ago it was) saying, “We've got a million bucks to spend. If you can send us a 350-word proposal by the end of the week, we'll send you a million bucks.” (~$5-$10 million today)

And that was the grant in basic research for computer science. And that grant lasted for, I don't know, 15 years or something like that. And even after that grant disappeared, that set the ethos of the computer science department. There's this one big chunk of money that supports all of the graduate students. Therefore, the graduate students are jointly sponsored by the entire department.

Do you know about Black Fridays?

Gilliam: No.

Thorpe: Twice a year, the entire computer science faculty sits down and talks about every graduate student. And the student has written a letter. And they [the faculty] read the letter. And they turn to the advisor and say, “What has your student been doing?” And if the advisor says, “Well, the student is making good progress and they did this and this and this,” and it matches what the letter has said, good. Then the student gets a letter saying satisfactory progress.

If the faculty member says, “You know, I've been trying to work with this guy and he's just so stubborn, he won't do what I tell him to do,” then the faculty write a letter saying, “You must, by next Black Friday, do this and this and this.”

If the student is co-advised and the one advisor says, “Well, I thought that Eric was working on it,” and Eric says, “Oh, I thought Chuck was working on it.” Now the faculty turn on the advisors and say, “You bozos, you have to get your act together and do a better job of advising this guy!” But it prevents one student from being abused by their advisor. If you're not happy with your advisor, because you're sponsored by the department you can change your advisors. But it also prevents one soft-touch advisor from saying, “Aw, poor student, he had a rough semester.” No, the entire department gets together and writes something. And there are these very carefully crafted letters of satisfactory progress, unsatisfactory progress, the dreaded ‘n minus one’ letter, and then being kicked out of the department.

So, the ethos is that we're all in this together; that we've got some joint resources; that the graduate students are joint resources; that we need to properly take care of these students and properly supervise them. So that gives the graduate student a large amount of flexibility. But this responsibility to not just their advisor, but to the entire department.

So that's great because students can find interesting projects. There is the marriage process where you walk in day one and each advisor says, “Here's what I'm up to. Here's how many students I'm looking for.” And then the students get to pick who they would like to work for. And the marriage process matches advisors who have interesting projects with students who are interested in working on them…

That's very different from almost any department that I've seen any place. Almost any place you're an indentured servant to the faculty member who happens to have graduate support. And if you don't get along with your advisor, you have the burden of seeing if there's somebody else who has money who will support you and seeing if you can switch advisors.

So that has set this attitude that we're all in this together. We'll have individual little projects, but we'll also have some of these big projects — like the speech projects — that a lot of people will work on.

Newell himself, at his height, had both his human-computer interaction/user interface stuff, but also his SOAR project — which was a pretty good-sized project with a number of graduate students working on it — trying to figure out how they could all fit together.

Newell said that, “You can do science in lots of different ways. One of the ways you can do it is just by doing something that's an order of magnitude bigger, or an order of magnitude faster. If you can process ten times the number of rules, then you're in a fundamentally different space, and you're doing something different, and the science is different. If you can run your computer ten times as fast, then the nature of the problems that you're dealing with changes in ways that you can't even predict.”

So, doing something 10 times bigger is great. So, he was very supportive of that kind of an attitude.

The Origins of the NavLab

Thorpe: Now, how did we get going on the NavLab? So I was sponsored on the original ONR project to do cool stuff. And one of the cool things that we did was to build Takeo’s direct drive robot arm. Another thing we did was to build this stereo vision for navigating across a room.

Side notion. We were sitting there working one day: graduate students, T-shirts, empty pizza boxes. And we heard the door open and we looked over and there was Raj and President Cyert arguing over who could open the door. And in walks this Navy admiral in full dress whites with all of the…Nobody had told us the head of ONR was coming to visit…

Larry Matthies, who's now Head of Computer Vision at JPL, Hans Moravec, myself…with robots and pieces. And in walks the head of ONR to see what he's been spending money on. Like, “Guys, if you told us we would have at least cleaned up the lab and probably had a demo ready to run.”

Gilliam: Or put on your good T-shirt.

Thorpe: And put on my good T-shirt! But he was impressed. And many years later I ran into him and he remembered that visit. And so he was happy to see robots.

When I was finishing my thesis, we had a meeting and Takeo asked each of us, “What do you want to do next?” I said, you know, we've been doing a good job indoors. I want to go outdoors and really build driving cars. And when we had gone around and said what we wanted to do next, Takeo came back and said, “Good, because DARPA has this money for Strategic Computing. They're going to be building the world's best computers, and then they need ways of showing it off.”

And, being DARPA, they have to have a way for the Air Force, and a way for the Army, and a way for the Navy… (Thorpe then goes on to explain the Battle Management and Pilot’s Associate systems covered in a prior piece.)…And the Army wants to build a robot scout. “So Chuck, you're in luck because what you want to do next is exactly what DARPA is going to have money for. So let's write a proposal for it.”

We had just hired a guy named Duane Adams. Duane had been Deputy Director of one of the DARPA offices — IPTO. And he came to be the Associate Director of Robotics and to help us write proposals. So, Duane said, “Chuck, start writing.” And we wrote a proposal for what it would take to do road following. And that was the beginning of the NavLab project.

So we submitted the proposal, one for road following and one for the architecture of what the system would have to look like. I went back, finished my thesis, and defended my thesis. I thought I would take a couple weeks off to figure out what I wanted to do next. Raj came up and said, “Congratulations, you passed your thesis. There's a meeting in my office to talk about how we start the NavLab project.” And that was my gap between my thesis and the NavLab project, walking from the defense room into Raj's office to start this meeting, to start planning what we were going to do.

Departmental decisions that made big systems projects feasible

Gilliam: To stop you for a quick second to add to the queue of operational questions. So I have the question of 1) grad students as ‘employees vs. novel thesis generators.’ And now 2) in one of the DARPA year-end reports for the NavLab from CMU, I saw them refer to you as a ‘project manager.’ Which is interesting…that's not an academic designation. That sounds very industry. So I would love for you to talk a little about that, too. If I should read into that or what that means to you.

Thorpe: Shouldn't read much into that. But what you should read into is when Newell, Simon, and Reddy really got computer science going — and before that Perlis and Shaw, etc…They said, “If we're going to do big projects,” — and we did big projects like the Andrew Project, that turned into the Andrew File System and things like that. They said, “We need to have more faculty than we have courses to teach. We can support them on soft money, so we need to have research scientists who have full faculty privileges.”

So if you're at MIT and you're not tenure track, you're nobody. Carnegie said, “No, we want to have non-tenure track people, supported on soft money, but who have advising privileges and PI privileges and all of the privileges that faculty have. They just may or may not be teaching courses at this time.” So, unique to computer science, there's this whole research track which is fully equivalent to the tenure track. And that was my underlying title. When I defended my thesis in October, Raj said, “I can't make you a faculty offer. You're going to be a postdoc until July 1 and then I'll make you a research scientist.”

So I came up through the research ranks after having spent that eight months or so as a postdoc. And it's partly that business of trying to figure out what research scientists were that caused computer science to first break out of the Mellon College of Science and be a free-floating department. And then form themselves into a school so they could really have this parallel track.

‘Project manager,’ that was just sort of a title because that's what I was doing on the NavLab project. Raj took me aside. He said, “I don't care how many papers you write. I don't care how many awards you win. That vehicle has to move down the road. If you do that, you're good. I'll defend you. I'll support you, I'll promote you.”

Because I was a graduate student writing this proposal, I couldn't be a PI. So Takeo was a PI and I think Hans Moravec was a PI. And then as I became a faculty, I became a co-PI and then eventually the PI. But it was pretty clear that Takeo was interested in the project because it was a cool project. He was also interested if he could have a few people working on individual projects. But he didn't want to worry about scheduling stuff, and who got time on the machine, and writing reports. So, he was very happy to turn all of that over to me and let me run it the way that I wanted to run it.

Gilliam: And how was that? Can you describe your approach? Let's say, describe your approach to…somebody who knows how an academic lab works usually, but doesn't do anything like this. They output papers, they play the h-index game a little bit, etc.

Thorpe: Well, you pointed out a very key feature here, that the whole system has to work and that each student has to have something that they can claim as their own and that they can write up as their own thesis topic.

So, we got ready to put the system together and I said, “How are we going to see the road? Jill [Crisman], how do you want to do it?” Oh, well, she wanted to work on electrical engineering, signal processing, color classification scheme. “Great, you go do that. Here's what your interface needs to look like. You need to tell me where the road is in the image. If you tell me that, someone will take that and will steer the vehicle.”

“Karl [Kluge], do you have any ideas?” “I think that we ought to be able to track edges and track color features. You ought to be able to track yellow lines and track white lines.” “Oh, that sounds like a good idea.” So Karl went off to do that. Great. “If you can tell me where the road is in the image, then I'll have someone figure out how to steer it.”

“Tony [Stentz], what do you want to do?” “Well, I really want to steer the vehicle.” “Good. You can do the path planning for that kind of thing.”

“Martial [Hebert],” he was a postdoc who came over from France, “what do you want to do?” Well, he was a 3D vision guy. “Cool! They're sending us a laser scanner. Do you think you could use that to determine where obstacles are?” “Oh, great. If you can find out where obstacles are, then feed that to me.”

“Jay [Gowdy], what do you want to do? I think you're a good systems guy. If someone tells us where obstacles are and someone tells us where the road is, could you figure out how to put the whole system together and give them useful user interfaces?”

So, really trying to build the system in a modular way, so that each person had something that they could do and be proud of. And if it worked, great! Then we plug it into the system and it runs. And if it doesn't work, well, I've got two other people working on other approaches to that same thing.
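The modular setup Thorpe describes, where several road-finding approaches answer the same question ("where is the road in the image?") and a separate module steers, can be pictured with a small sketch. This is only an illustration under stated assumptions, not the NavLab software: the names `RoadEstimate`, `RoadFinder`, `BrightestColumnFinder`, `ImageCenterFinder`, `steering_command`, and `drive_step` are all hypothetical, the two finders are toy stand-ins for the students' real methods, and picking the most confident estimate is an assumed arbitration rule that the interview does not specify.

```python
from dataclasses import dataclass
from typing import Protocol, Sequence


@dataclass
class RoadEstimate:
    """Where a perception module thinks the road is, in image coordinates."""
    center_column: float  # horizontal pixel position of the road center
    confidence: float     # 0.0 (no idea) to 1.0 (certain)


class RoadFinder(Protocol):
    """The shared interface: given an image, say where the road is."""
    def find_road(self, image: Sequence[Sequence[float]]) -> RoadEstimate: ...


class BrightestColumnFinder:
    """Toy stand-in for one approach: pick the brightest image column."""
    def find_road(self, image):
        width = len(image[0])
        sums = [sum(row[c] for row in image) for c in range(width)]
        best = max(range(width), key=sums.__getitem__)
        total = sum(sums)
        return RoadEstimate(float(best), sums[best] / total if total else 0.0)


class ImageCenterFinder:
    """Toy stand-in for a competing approach: always guess the image center."""
    def find_road(self, image):
        return RoadEstimate((len(image[0]) - 1) / 2.0, 0.1)


def steering_command(estimate: RoadEstimate, image_width: int) -> float:
    """Map a road position to a steering value in [-1, 1] (negative = left)."""
    center = (image_width - 1) / 2.0
    if center == 0:
        return 0.0  # a one-pixel-wide image carries no left/right information
    return (estimate.center_column - center) / center


def drive_step(finders, image):
    """Ask every module, trust the most confident one, and steer toward it."""
    best = max((f.find_road(image) for f in finders), key=lambda e: e.confidence)
    return steering_command(best, len(image[0]))


# Usage: a tiny 3x5 "image" whose rightmost column is bright.
image = [[0.0, 0.0, 0.0, 0.0, 1.0]] * 3
command = drive_step([BrightestColumnFinder(), ImageCenterFinder()], image)
```

The point of the design is that the steering code depends only on the interface. A new road finder can be dropped in, or a failing one pulled out, without touching anything downstream.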

Gilliam: And if somebody like Dean [Pomerleau] came in with neural nets — wanting to apply Geoff Hinton's somewhat theoretical methods to this — how do you fold them in? Do they sit off a little further to the side in the lab? What does that look like?

Thorpe: Well, first of all, we said, “Neural nets? Oh, come on, nobody's ever built a neural net that does anything. Haven't they proved that neural nets aren't going to work? Um, you want to try it? Sure, try it. Let's see what happens. What would you like? You want some images? We'll collect you some images. Come on in. We'll see.”

And then talking to our people at DARPA, because there was a different and competing office at DARPA doing neural nets, and saying, “Look, I know you're skeptical about neural nets. We've got this neural net guy, separately funded. I want to give him a shot on this. You don't mind if he uses our robot?” “No, that's fine. We'll disprove neural nets once and for all. So come on in and try it out.”

Dean turns out to be an extraordinarily bright person. And you wouldn't know it to meet him, but he's extraordinarily competitive. And he's willing to get his fingers into everything. So, mostly what he wanted was this recording of images and steering wheel position. And then he went off and started to work on that and built a system with 100 hidden units and it seemed like it could work, but really slowly. And so he cut it down to 50 hidden units and it seemed like it could work as well, and a lot faster. He cut it down to 25 hidden units. And he eventually got down to four hidden units, and now he was working in a believable amount of time and it still worked pretty well. He cut it down to three hidden units and it didn't work anymore. So, okay, four hidden units.

Back to this theme of Strategic Computing and DARPA building supercomputers. One of the supercomputers they built was at Carnegie, and it was a thing called the Warp machine. The Warp machine had ten pipelined units, each of which could do ten million adds and multiplies at once. (The Warp machine and BBN’s Butterfly processor were briefly covered in an earlier piece in the ARPA series.)

H. T. Kung was working on systolic computing, where things just sort of chunk through the pipeline. And we said, “Here's this big box. Because we're sponsored by Strategic Computing, we'll put it on the NavLab. Can anybody figure out what to do with it?” “Well, it's a really complicated thing and really hard to process.” But Dean could figure out what to do with it. Partly because Dean is a really, really smart guy.

Partly because the inner structure of a neural net is, “Multiply, add, multiply, add.” You take these inputs, you multiply them by a weight, you add them together, you sum it up, run it through your sigmoid function. So, Dean was, as far as I know, the only person… well, that's maybe a bit of an exaggeration. Some of the speech people were able to use the Warp also, because they were doing similar kinds of things…But Dean got the full hundred megaflops of processing out of the Warp machine! And then he put it onto the NavLab and we got the whole thing to work. So, after that, Jill got her thesis for doing her thing. Karl got his thesis for doing his thing. But Dean's neural nets seemed to work in most situations better than either Jill's or Karl's. And so that's the one that got all the attention.
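For readers who have not looked inside a neural net, the “multiply, add, multiply, add” structure can be written out in a few lines. The sketch below is a generic one-hidden-layer forward pass, not ALVINN's actual code. The function names (`sigmoid`, `layer`, `forward`, `multiply_adds`) and all layer sizes are illustrative assumptions. It also shows why cutting the number of hidden units shrinks the work roughly in proportion, which fits the speedups Thorpe describes.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One fully connected layer: multiply, add, then apply the sigmoid."""
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        total = bias
        for x, w in zip(inputs, unit_weights):
            total += x * w  # the multiply-add at the heart of the network
        outputs.append(sigmoid(total))
    return outputs


def forward(pixels, hidden_w, hidden_b, out_w, out_b):
    """Input pixels -> hidden units -> output units (e.g. steering choices)."""
    hidden = layer(pixels, hidden_w, hidden_b)
    return layer(hidden, out_w, out_b)


def multiply_adds(n_inputs: int, n_hidden: int, n_outputs: int) -> int:
    """Count the multiply-add operations in one forward pass."""
    return n_inputs * n_hidden + n_hidden * n_outputs


# Usage with made-up sizes: 10 input pixels, 4 hidden units, 3 outputs.
n_in, n_hid, n_out = 10, 4, 3
pixels = [0.5] * n_in
hidden_w = [[0.0] * n_in for _ in range(n_hid)]
out_w = [[0.0] * n_hid for _ in range(n_out)]
steering = forward(pixels, hidden_w, [0.0] * n_hid, out_w, [0.0] * n_out)

# The work scales with the hidden-unit count: 100 units cost 25x what 4 do.
ratio = multiply_adds(n_in, 100, n_out) / multiply_adds(n_in, n_hid, n_out)
```

Because nearly all of the work is the same multiply-add repeated over and over, this kind of computation is a natural match for a pipeline of arithmetic units.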

Going from ALVINN to RALPH and CMU’s complementary systems projects

Gilliam: What did you consider a bigger practical breakthrough? There's obviously the ALVINN system, which to a modern machine learning engineer’s eye, would give them a little tear. “Wow, it's so minimalistic. It can do all this (with mostly just neural nets).” And then RALPH comes around. It’s a little more manual. Did you consider RALPH a much bigger practical step? Or once ALVINN came around, did it seem somewhat clear to you that something like RALPH would be possible and better?

Thorpe: So that was part of it, but the other part of it is…how do you take something like ALVINN…which at its core is just turning steering wheels. It's not doing any representation of the world…how do you take that and merge that with obstacle detection and avoidance? How do you take that and merge that with a map? And so, layering architectures on top of this that can take something which shouldn't really have a geometric representation and extract some sort of a geometric representation so we could do other things with it. That was really the fun part of it.

Gilliam: Is that when it became a proper, all hands on deck, CMU-type project — going from ALVINN to RALPH? It sounds like ALVINN was not a one-man job, but smaller. But then maybe RALPH became a proper CMU systems effort.

Thorpe: So Todd [Jochem] came along and worked with Dean. And part of what Todd did was to say, “How do you get some geometry out of this so that you can train multiple different neural nets and switch between them?” So that's even separate from RALPH. That was still in the ALVINN stuff. How do you train this thing up and have things work together? But if you look at the NavLab '90 videotape where we're going from my house to Keith's house, that was the obstacle detection and stopping. That was landmark recognition. That was the annotated map saying when we're supposed to do what. That was high-accuracy dead reckoning when we were doing a sharp turn and ALVINN couldn't work. Getting all of those system pieces to work together was a lot of fun. And this is on top of an experimental vehicle where you never knew what things were going to break.

Gilliam: I’d like to put something on the record, and you let me know if I'm wrong. So I think if some of my readers read what you said, they would say, “Oh, how fortunate that CMU had this systolic array machine going on and that it happened to be able to — obviously some computer structures were somewhat specialized back then — that it happened to be able to work with the natural language project and the vision project.”

But my reading of the history is that this doesn’t seem like circumstance. Now, this was a period when a lot of people weren't pursuing building machines at universities anymore. Obviously, this is not long after Slotnick [and the ILLIAC IV machine] at U of I. And Slotnick's gone on the record some years before saying, “The era when you could build big, good machines at a university is over.” Like, “This is for industry now, it's not for universities to do.” It seems like CMU’s systems approach helped prolong the era where you could build a good workable machine at a university.

Also I read that the Warp folks made sure that their machine could work with the other ongoing CMU systems efforts like vision and like natural language. Is that fair? Or is that me giving too much credit to the CMU structure?

Thorpe: So the CMU structure, when I got there, they had just finished building CM*, and before that C.MMP. CM* was a powerful array of 50 PDP-11s. But the difference between C.MMP and CM* was they were playing with…if you have multi-processor systems, do you connect a big crossbar switch with every memory connected to every CPU? Or do you connect them in some sort of a hierarchy? And so this notion of building big systems, big hardware systems, was there.

And of course, computer vision has always been a hog for CPU cycles. So in the early days, they built these systems and then they said, “Computer vision people and speech people, have at it!” Because the computer vision people and the speech people knew that we needed the cycles. And we were willing to put up with a fairly klutzy interface in order to see if we could take advantage of these sorts of things.

So, that ethos was there. The names C.MMP and CM* come out of the Newell and Siewiorek book. So here's Al Newell, a pioneer of AI, writing a textbook on computer architecture because he understood that AI needed multiprocessors in order to work. So that ethos was there.

We had a guy in the computer vision group named Rick Rashid, who was working on how you make the hardware more efficient, and how you make the software more efficient, and how you build microkernels. And he's the guy who went out and started Microsoft Research. So there's this long history of computer vision people having to have the highest possible processing power and being willing to put up with a fair amount of klutzy-ness in order to take a wire-wrapped prototype and really use it for high performance.

Concluding Thoughts: CMU’s applications-focused culture, its origins, and the legacy of its grad students

Gilliam: This has all been fantastic. Is there anything else you think people should understand about how CMU managed its projects? It was a quite exceptional contractor there for a while…To me, CMU in this era seems like one of the all-time great DARPA contractors. Right up there with BBN in the ARPAnet era and Skunk Works when it built STEALTH. In many ways, CMU seems quite similar to BBN.

(Here, Chuck and I went on a 5-10 minute tangent discussing some of his friends from BBN, DARPA’s Clint Kelly, and others from the DARPA Strategic Computing era. Check that out on the audio if you’re interested. But it’s been skipped in this abridged version.)

Thorpe: …Back to Carnegie. Carnegie had a couple of aspects of the culture. One is that doing big things and doing practical things makes a difference. So if you look at what Carnegie has done…Duane Adams, his first proposal he wrote was for the NavLab. The second proposal he wrote was for the Software Engineering Institute. Running an SEI, that's not a typical academic thing.

But there are places like Berkeley. Berkeley runs the Berkeley labs and the Lawrence labs. So running an FFRDC (federally funded research and development center) is something that a handful of universities do, but not everybody. You don't get a lot of published papers out of that, but what you do is you provide a venue to take the basic research you've done and to turn it into practical things which change society.

So the SEI does big software engineering projects for DOD, but they also do a lot of training. They also run the CERT (Computer Emergency Response Team). So, when some new virus breaks out, SEI jumps in and tells the world what the virus is and how to fix it. And that's the kind of thing that is a little bit unusual for a university to do. But Carnegie had the culture to say, “Making a real impact in society is important. And this is one way you can do it. Let's do it separately, off campus, so we don't dilute the graduate students and writing the papers, etc. But let's have this pipeline.”

Within robotics, NASA came to us, I don't know, 30 years ago and said, “NASA is spending a lot of money on robotics. We're not getting good PR out of it. Partly because our robots are, they go off to places like Mars. Could you take the technology that NASA is developing and work with people like John Deere?” So we formed the Robotics Engineering Consortium. It sits off campus. It employs more staff and fewer graduate students. It can do confidential projects. And it takes basic research done on campus and turns it into practical projects that Deere can take and turn it into products.

So that whole notion of a halfway house to take basic research and turn it into something bigger, something more practical, something applied…that this is a good thing for a university to do…that's not a universal university ethos.

Gilliam: Two-part question. The first is: why do you think CMU was able to maintain that ethos for so long? And the second part is interesting because, now, you’re a university president. You have the benefit of all sorts of institutional knowledge. Why do you think schools struggle to manage projects this way, if it seems, in many ways, to be a natural default approach for technical subjects?

Thorpe: Well, let me give you two reasons why Carnegie succeeded in doing that. The first one, I think, is really more Newell than Simon. Simon wanted to do great intellectual projects. Newell wanted to build things. So Newell caught on that building big stuff and seeing how it worked in the real world was important. And then Raj pushed that even further, starting out with speech. But then also the hardware projects etc. The other reason why Carnegie did this…if you go back in the history, there was the Carnegie Institute of Technology, and there was the Mellon Institute of Science.

The Mellon Institute of Science was the contract research shop. When the Mellons owned Gulf Oil, an aluminum corporation, and big chunks of various steel companies, they needed a chemistry research shop. And so the Mellon Institute was this chemistry research shop, and it existed to do contract research. If you got to produce some papers, that was good, but it was 50 Ph.D. chemists doing industrial research. In the great merger of ‘67, which formed Carnegie Mellon, we absorbed the Mellon Institute and had to figure out what to do with it. And partly what we did with it was to keep it running as a contract research shop.

So, there was already this notion of an off-campus, sponsored by soft money, more practical research shop that we didn't quite know what to do with. But in an ideal world, you would take basic research from the main campus and then figure out how to apply it, etc. So that was a little bit in the DNA of Carnegie since the merger in ‘67.

Gilliam: It's not dissimilar to MIT circa 1920. It had a program called the Technology Plan. For context, when MIT was young, from 1860 to 1920, they were perpetually poor and living on shoestring budgets. Back then, more than now, MIT really lived on the ethos of, “We serve industry above all else. That’s what we’re here to do.” So, the Technology Plan came about because, at a certain point, they said, “The most honorable thing an institute of technology can do is provide a great service for a fair price.” So they let their applied researchers loose to work a lot of these contracts, to the point where their chemical engineering/applied chemistry department was 75-80 percent industry funded.

So this is very interesting.

Thorpe: Sure. And MIT has this history of spinning out the Draper Labs and the Lincoln Lab. And again, that's stuff that's too applied to be done in a university lab by graduate students, but that should have some connection to the university. Less connection in the case of Draper Labs because of some firewalls. But MIT has some of that same ethos. Yeah, Carnegie had this very practical streak to it.

Paul Christiano was the provost for a while, and his dad was a Carnegie Tech graduate in civil engineering back when the civil engineering curriculum had courses like bricklaying. This is really hands-on, get out there and get your hands dirty.

Gilliam: Yeah. Back when they used to be able to teach industry machinists at night and things like that, at places like MIT or Carnegie. Different world we live in now.

Thorpe: So, when I was director of the Robotics Institute, I said, “We have won the battle to be the biggest robotics research group. We're very good at producing smart robots. We're very good at producing smart graduate students. We're not very good at producing smart industry. How do we build up a robotics industry in the city of Pittsburgh?” And we started working with the big foundations there. We started working with the little spinoff companies. There were a bunch of them, but none of them were very big. And we started working with the university management to say, how do we pull all of this together?

And when I say the foundations…like the Hillman Foundation, which is one of the richest foundations in the city…John Denny from L. C. Hillman's office came and sat with us and said, “How are we going to build a robotics industry?” And that led to the Robotics Foundry, and that led to Pittsburgh becoming RoboBurgh, which eventually led to these bigger clusters and some federal manufacturing research centers and so forth.

Gilliam: So in that case, do you take a lot of pride in the following? In your one previous oral history, you referred to the early Google group as “Carnegie Mellon West.” You said this talking about how Sebastian [Thrun], who of course, built his DARPA Grand Challenge vehicle at Stanford, but he came up at CMU. And you pointed out how everybody but one in that early Google autonomous driving group kind of came up or had a deep affiliation with CMU. And it seems like that's held even recently. There are a lot of departments that do pretty good autonomous car work, but Uber came to CMU, specifically, to essentially attempt to buy up a whole swath of the university and its projects wholesale to fold into itself.

Do you take a lot of pride in the projects and people that CMU has been outputting being considered applied enough that they could be a direct input into an industrial process? Rather than what the current applied researcher’s credo seems to be at a lot of universities, namely, “Somebody could pick this up and within a handful of years, it can then be applied.”

You all are kind of directly plugging in.

Thorpe: So, Matt Mason — who was on my thesis committee and was my successor as Director of the Robotics Institute — he's a much more theoretical roboticist. And he says, “You know, if all of the applied people left Carnegie Mellon, we would still be a famous robotics institute for all of the more theoretical stuff that we do.”

But they had to kind of live in the shadow because, face it, it's much easier to do a videotape of a robot flying down the road and to explain to the Pittsburgh Post-Gazette what it is that we're up to than it is for the theoretical people to say, “Ooh, and look what I can do with a quaternion, I can represent this thing…and this is a fundamental advance that people are going to be using for the next century!” And they're right! And we are grateful to them. They just have a harder time getting the publicity out of it.

So the DARPA Urban Challenge was just a family feud. Sebastian and I have a best paper award together. Sebastian and Red [Whittaker, the engineering lead on the DARPA Grand Challenge teams] have a best paper award together. Chris Urmson, who is Sebastian's number two, was Red's graduate student. So this was just a family feud. Those guys happen to be out at Stanford, but they’re still buddies of mine.

(We then talk for a bit about semi-related topics before I ask him if he’s also proud of the number of NavLab/CMU grad students that have gone on to be technical leads at companies or found companies.)

Thorpe: Yeah, you know, I had expected more of my alumni to go off into academia. If you're an academic yourself, you sort of think, “Shouldn't everybody want to do what I do?” And, no! They've gone off and started their own companies. Jill Crisman was the AI guru for the Department of Defense and now has gone back into private industry. Jenny [Kay] went into academia and still teaches. John Hancock went off into the computer game industry, which is a good thing. Hardware keeps breaking for him. So we thought that actually working on real robots was not as good as working on simulated robots.

Parag [Batavia] started his own company. Raul [Valdes-Perez] went off to Google. So it's been fun watching these guys. They have this attitude that, “We can do it! Here's a cool problem. Let me figure out how to make something work. Oh, cool. That works. Uh, now that it works, let me see if I can figure out how it works and write a paper out of it.”

And that's just a fun attitude and fun to watch them go and succeed.

Gilliam: “And if it works, how do I make it usable? How can it be productized?”

Thorpe: The next thing, and the next thing, and the next thing. Some of them have figured out, “How do I take this and turn it into money?” Others have said, “How do I take this and turn it into robots that run on the surface of Mars?”

I cut off the recording there. Over the course of the interview, I came to admire Carnegie’s applied-minded approach to engineering work even more than I did coming into the interview.

Hopefully, you enjoyed this exploration into how CMU managed its exceptional autonomous vehicle research work from the mid-1980s through the early 2000s. CMU’s strategies are, in practice, quite logical. However, comparing their methods to academic norms, it is clear that CMU continually made decisions to go against the academic norm. Doing this, they maintained their major comparative advantage. The result, in the field of computer science and robotics, was a systems research powerhouse that helped shape the field of autonomous driving as we know it today. In many ways, this period of CMU’s history is the closest university example I have seen to the fabled early years of MIT.

Thanks for reading :) See you next time!

Pattern Language Tags:

Utilizing a contractor made up of individuals with research-style goals and training working within a ‘firm’ structure.

Building out an experimental test bed for a research field.

Putting out contracts for specific “integrators” in addition to prime contractors and basic researchers.

This piece is a part of a FreakTakes series. The goal is to put together a series of administrative histories on specific DARPA projects just as I have done for many industrial R&D labs and other research orgs on FreakTakes. The goal — once I have covered ~20-30 projects — is to put together a larger ‘ARPA Playbook’ which helps individuals such as PMs in ARPA-like orgs navigate the growing catalog of pieces in a way that helps them find what they need to make the best decisions possible. In service of that, I am including in each post a bulleted list of ‘pattern language tags’ that encompass some categories of DARPA project strategies that describe the approaches contained in the piece — which will later be used to organize the ARPA Playbook document. These tags and the piece itself should all be considered in draft form until around the Spring of 2024. In the meantime, please feel free to reach out to me on Twitter or email (egillia3 | at | alumni | dot | stanford | dot | edu) to recommend additions/changes to the tags or the pieces. Also, if you have any ideas for projects from ARPA history — good, bad, or complicated — that would be interesting for me to dive into, please feel free to share them!

Specific Links:

Chuck Thorpe’s IEEE Oral History (video) (transcript)

Red Whittaker’s IEEE Oral History (video) (transcript)

Raj Reddy’s Computer History Museum Oral History (Parts One and Two)

Dean Pomerleau’s 1988 paper, ALVINN: An Autonomous Land Vehicle in a Neural Network