Don Swanson’s career started on a path familiar to many who read this blog. A BA in Physics from CalTech in 1945. A Ph.D. in Theoretical Physics from UC Berkeley in 1952. A decade working in scientific labs. Then his career took a turn when, after a decade of working in traditional scientific labs, Don took a professorship in the Graduate School of Library Science at the University of Chicago. For those keeping track, Don is the first Professor of Library Science mentioned on FreakTakes. But, as weird as it is to say, thanks to some of his trailblazing work and recent progress in the world of computing, it’s very possible he won’t be the last.

Don would become known for developing computing methods to take advantage of the increasingly online nature of scientific papers — leading to discoveries in a field he knew little about, biology.

The first paper in a series that would come to be his career-defining work was released in the April 1986 edition of The Library Quarterly. The paper, titled “Undiscovered Public Knowledge,” is a fascinating piece of first principles thinking that is even more interesting today than it was in 1986 — Swanson’s big idea was not dependent on the technology of his time, but limited by it.

This post is a much longer writeup to an abbreviated response I gave to Michael Retchin when, at coffee, he asked I had any ideas for incorporating LLMs into a research workflow. It is also a bit of a break from my pieces on industrial R&D labs and applied research — which are my primary focus and will be resuming in the coming weeks.

In the first section, I tell the story of Don Swanson and his use of computers to identify undiscovered public knowledge. In the second section, I detail how Mullainathan and Ludwig, recently, have devised a pipeline — enabled by modern ML — to generate novel social science hypotheses.

Then, in the third and final section of this piece, I enlist the help of an actual life scientist — Corin Wagen. Corin gives a practical breakdown of how one could deploy the general ideas covered in the first two sections of this piece to the field of organic chemistry.

The first two sections and the conclusion are written by myself; the third section was written by Corin. Back to the action!

In spite of Swanson's 1986 paper being the first in a very practical series, 22% of the citations in the article cite a non-scientific researcher: Karl Popper. Popper, the great philosopher of science, outlined a model of how science works in practice defined by an interplay of three worlds. Popper's model of these three worlds is what set the stage for Swanson’s simple idea which, in Popper’s era, would not have been at all feasible.

Popper’s model, as Swanson saw it, saw the truth-seeking that made up the real scientific process as a messy and failure-prone process. This messy process is what Gerald Holton referred to as ‘science-in-the-making’ or S₁. While science-in-the-making is much messier than the clean write-ups that appear in journals, it is how great science is actually done.

Swanson further broke down his reading of Popper and how he viewed the body of scientific knowledge contained in existing research, saying:

The question of what is or is not real can in part be fought out on the battleground of critical argument, with various scholars corroborating or correcting the perceptions of others.

Knowledge begins therefore with conjecture, hypothesis, or theory, all of which mean about the same thing. Scientific knowledge grows through testing and criticizing theories and through replacing theories with better ones that can withstand more severe tests and criticism. Thus knowledge is constructed of conjecture, and, though filtered through reality, remains forever conjectural. The sine qua non of science is not objectivity or even "truth," as is often thought, but a systematically self-critical attitude. Scientists are expected to propose testable theories and to be diligent in seeking evidence that is unfavorable to those theories. If they do not criticize their own work, someone else will.

Public criticism and published argument are crucial in helping the scientist create a product that can rise above his own prejudices and presuppositions. Although scientists and scholars may never be objective, the published products they create, shaped and weeded by criticism, can move ever closer to objectivity and truth.

Popper’s model of the three worlds of science contained:

World 1, the physical world. This is the actual world which scientists and engineers concern themselves with.

World 2, researchers’ swirling thoughts about the physical world. This is the subjective world of “mental states and mental processes” that happen in individuals’ heads. World 2 can be taken to mean many things. But, for the sake of this piece, we can take World 2 to mean the collection of personal experiences, ideas, and individual thought processes that pertain to the physical world (World 1) but are not exactly formal scientific theories or fully fleshed-out ideas. The beginnings of theories are based on some vast collection of experiences with the physical world present in any individual researcher’s head. This collection is contained in World 2.

World 3, the (mostly) solidified body of scientific knowledge. This world contains problems, theories, and other products of the human mind. As Swanson noted, “World 3 is real in that, through interacting with World 2, it can influence World 1.”

Popper pointed out the interesting implication that World 3 was created by man, but it could give rise to problems unforeseen by its creators. As one example, man created a number system, but with the number system came “an infinity of unintended and unforeseen consequences, including prime numbers.” Each of these unforeseen consequences awaited discovery. Many of these relevant unforeseen consequences have been discovered, but a countless number of them have also not been.

Swanson reiterated this point, saying:

World 3, while created by man, contains far more than man has ever thought of or dreamed about. World 3 indeed must contain ever increasing quantities of undiscovered knowledge.

The objective state of World 3, and what we subjectively know about World 3, are quite different concepts. So far as either certainty or "truth" is concerned, I shall show that World 3 must be in principle unknowable in the same sense that World 1 is unknowable. In either case, we cannot know, we can only guess. Only a small portion of World 3 is known to any one person. Some things we can perhaps know reasonably well, such as a theory that we ourselves have invented. But, once our invention becomes public knowledge-a bona fide resident of World 3 — it takes on a life of its own.

There are many implications of this conclusion, but the one most relevant to this piece (and Swanson’s work) is that many great, new ideas can be found by a researcher primarily concerning themself and their research process with only the observations in World 3 — rather than in World 1. In Swanson’s eyes, only so much of World 3 is ever knowable to any one researcher or research team’s brain. Computers and new methods of information retrieval, in 1986, were starting to change that. This led to practical implications that Popper's model did not explore — but which Swanson's work would.

Swanson had learned in his now two decades in his adopted world of library science that the role of librarians and their indexing methods were vital to the scientific process. Individual researchers were only familiar with certain aspects of the scientific literature, and librarians had developed methodologies to, as best they could, help researchers identify and gain access to the public scientific knowledge which they did not know how to find. When in this position, a researcher often does not know exactly what they're looking for, how to explore the literatures of related fields, how to account for the fact that some concepts go by multiple terms, etc.

Swanson — and anyone who has ever used a library index — knew the librarians' methodologies were better than nothing, but also extremely limited. Interestingly, he noted, “Information retrieval is necessarily incomplete, problematic, and therefore of great interest — for it is just this incompleteness that implies the existence of undiscovered public knowledge.”

He then went on to explain several hypothetical examples in which advancements in information retrieval could have extremely practicable scientific implications. One of these examples was titled, ‘A Missing Link in the Logic of Discovery:’

A more complex, and perhaps more interesting, example will further illuminate the idea of undiscovered public knowledge. Suppose the following two reports are published separately and independently, the authors of each report being unaware of the other report: (i) a report that process A causes the result B, and (ii) a separate report that B causes the result C. It follows of course that A leads to, causes, or implies C. That is, the proposition that A causes C objectively exists, at least as a hypothesis. Whether it does or does not clash with reality depends in part on the state of criticism and testing of i and ii, which themselves are hypotheses. We can think of i and ii as indirect tests of the hidden hypothesis "A causes C."

In order for the objective knowledge "A causes C" to affect the future growth of knowledge-that is, in order for it to be tested directly, or to reveal new problems, solutions, and perhaps new conjectures, the premises i and ii must be known simultaneously to the same person, a person capable of perceiving the logical implications of i and ii. If the two reports, i and ii, have never together become known to anyone, then we must regard "A causes C" as an objectively existing but as yet undiscovered piece of knowledge — a missing link. Its discovery depends on the effectiveness with which information can be found in the body of recorded knowledge. Notice that "A causes C" is not something once known but forgotten; it is genuinely new knowledge that awaits discovery by explorers of World 3.

Swanson, in this paper, proceeds to put this theory to the test, highlighting an A→B→C relationship which he used his own computing pipeline to identify. In this case, A was fish oil, B was the reduction of platelet aggregability and blood viscosity, and C was the risk of Raynaud’s disease. In the literature, it seemed that A caused B. In the literature, it seemed that B might counteract C. But there were no papers implying that A counteracted C.

Maybe it had been tried, failed, and not published due to publication bias. Maybe it was a silly idea for a reason that Swanson, not a biologist, was unaware of. Or maybe this was an idea that was brand new to World 3 and worthy of empirical testing by scientists who concerned themselves with World 1.

So, Swanson wrote the idea down and proposed that any interested life sciences research team test the hypothesis. It turned out that he was somewhat onto something! A paper published in the following years concluded:

We conclude that the ingestion of fish oil improves tolerance to cold exposure and delays the onset of vasospasm in patients with primary, but not secondary, Raynaud's phenomenon. These improvements are associated with significantly increased digital systolic blood pressures in cold temperatures.

Swanson’s most well-known finding using this A→B→C methodology, published two years after this initial paper, pertained to magnesium and the alleviation of migraines. The paper, titled “Migraine and Magnesium: Eleven Neglected Connections,” explored eleven possibly unnoticed connections between the pair of literatures on migraine and magnesium.

In the introduction of this paper, Swanson breaks down the necessity of this style of work even more clearly:

Undocumented connections arise neither by chance nor by design but as a result of the inherent connectedness within the physical or biological world; they are of particular interest because of their potential for being discovered by bringing together the relevant noninteractive literatures, like assembling pieces of a puzzle to reveal an unnoticed, intended, but not unintelligible pattern. The fragmentation of science into specialties makes it likely that there exist innumerable pairs of logically related, mutually isolated literatures.

Swanson then goes on to point out a litany of A→B→C style connections that connected A, magnesium levels, to C, the presence/severity of migraines. In spite of a number of connections, the number of papers in the literature that mentioned A and C together was vanishingly small. The eleven factors relevant to both migraine physiopathology and the physiological effects of magnesium were: type A personality, vascular tone and reactivity, calcium channel blockers, spreading cortical depression, epilepsy, serotonin, platelet activity, inflammation, prostaglandins, substance P, and brain hypoxia.

So, naturally, Swanson recommended that researchers in the medical community that concern themselves with World 1 look into this relationship to see if there was causality. And, once again, it seems that Swanson and his methods were vindicated. While magnesium treatments did not prove to be a silver bullet for migraine sufferers — obviously migraines still exist — magnesium has since become a remarkably common treatment to help alleviate migraine symptoms. A paper as recently as 2012, titled “Why all migraine patients should be treated with magnesium,” argued, “The fact that magnesium deficiency may be present in up to half of migraine patients, and that routine blood tests are not indicative of magnesium status, empiric treatment with at least oral magnesium is warranted in all migraine sufferers.”

Even if the reader is not impressed with those findings in and of themselves, the findings should surely be taken seriously as an extremely convincing proof of concept of the idea that undiscovered ideas are out there and can be practically discovered with the right scientific area and computationally-augmented workflow. These new hypotheses done using data from World 3 can then be tested and verified/rejected using traditional scientific methods on World 1.

These pipelines will never be perfect. And using them well may always be — as is the case for much of computing — more of an art or messy engineering process than a pure science. As Swanson pointed out, the problem of information retrieval is inevitably incomplete and is destined to not be perfectly efficient. But the areas of computing relevant to this problem area have improved by many orders of magnitude in the years since Swanson…and, as readers have surely noticed, to a shocking degree in the past year or two.

New functionality exists, and much is possible now that was not before. The pipeline which Swanson used to generate his insights was remarkably primitive by modern standards.

I'll (briefly) break down his process to give the reader an idea. For various diseases, such as migraine, Swanson scanned a Medline file in which 2,500 article titles contained the word “migraine.” One of those titles referred to a possible relation between spreading depression to the visual scotomata of classic migraine. Then, as Swanson detailed:

The phrase “spreading depression” is next explored on its own, by examining titles in which it occurs and reading the literature itself is necessary. One can discover among other things that magnesium in the extracellular cerebral fluid can prevent or terminate spreading depression. Combining this new fact with the above connection, one is led to the conjecture that magnesium deficiency might be a causal factor in migraine.

A surmise that migraine might be a vasospastic disorder led to another connection with magnesium. By examining several hundred titles containing the word “vasospasm,” one can notice a few titles indicating that magnesium deficit can cause vasospasm. That discovery can be made more efficiently if one guesses at the outset that a deficiency of some kind might be implicated. By requiring the Medline subheading “deficiency” to appear in the descriptor field, one can narrow the several hundred titles with “vasospasm” down to four, of which three are about magnesium.

Swanson points out that, of course, this methodology did not lead directly or exclusively to magnesium. It led to many other guesses, most of which were discarded. The biggest reason for hypotheses being weeded out was simply that two terms tended to be mentioned in the same article frequently. Swanson’s heuristic took that to mean the hypothesis had already been examined.

Swanson later put together a computer system called Arrowsmith which attempted to make carrying out these steps easier, but it never became widely used. This system was inevitably quite manual. And the use of computers in day-to-day knowledge exploration tasks was both not as common today and the machines themselves were much less capable.

But Swanson’s core idea should live on! Yes, his methods were simple. And, yes, his findings were not Nobel-winning (or anything close). But one can only imagine what he may have attempted had modern text processing, tf-idf scoring, or cosine similarity existed in 1986. Swanson only had keyword searching, manual skimming, and the faint idea that this should be possible available to him.

We now live in a different world — one Swanson only could have dreamed of. The task of rooting out undiscovered public knowledge and aiding human researchers in assembling this information into real-world scientific discoveries is absolutely something that, now, should be very seriously re-visited.

With the improvements in LLMs, it seems we are living in a world where language models — whether or not they ‘understand’ in a literal sense — have capacities that often mimic human capacity to understand conceptual phenomena beyond the literal words on a page. Creative minds can likely find ways to make use of this fantastic new tool in the hypothesis-generating process. Surely, at the minimum, Swanson's A→B→C methodology to find undiscovered public knowledge in World 3 is one viable way to do this. But I imagine even more fruitful frameworks for deploying modern computing tools to accomplish similar goals are out there to be discovered as well.

This will be explored in more detail in Corin's section which contains some ideas on how this could apply to modern organic chemistry research. But, first, I'd like to explore a recent paper on machine learning as a tool for hypothesis generation. The paper seeks to generate new hypotheses in a common area of computational social science, causes of bias in bail decisions, using non-LLM methods in modern ML — image recognition, morphing, and GANS.

Ludwig & Mullainathan’s “Machine Learning as a Tool for Hypothesis Generation”

Jens Ludwig and Sendhil Mullainathan recently released a working paper on machine learning as a tool for hypothesis generation. The paper demonstrates that a very different future of hypothesis generation could already be upon us — in very specific areas at least.

The authors open with a beautiful description that outlines how messy, human, and creative hypothesis generation usually is. It is a description that does a good job of unpacking the kind of activities that tend to reside in Popper’s World 2. The authors open the paper, writing:

Science is curiously asymmetric. New ideas are meticulously tested using data, statistics and formal models. Yet those ideas originate in a notably less meticulous process involving intuition, inspiration and creativity. The asymmetry between how ideas are generated versus tested is noteworthy because idea generation is also, at its core, an empirical activity. Creativity begins with “data” (albeit data stored in the mind), which are then “analyzed” (albeit analyzed through a purely psychological process of pattern recognition). What feels like inspiration is actually the output of a data analysis run by the human brain. Despite this, idea generation largely happens off stage, something that typically happens before “actual science” begins. Things are likely this way because there is no obvious alternative. The creative process is so human and idiosyncratic that it would seem to resist formalism.

That may be about to change because of two developments. First, human cognition is no longer the only way to notice patterns in the world. Machine learning algorithms can also notice patterns, including patterns people might not notice themselves. These algorithms can work not just with structured, tabular data but also with the kinds of inputs that traditionally could only be processed by the mind, like images or text. Second, at the same time data on human behavior is exploding: second-by-second price and volume data in asset markets, high-frequency cellphone data on location and usage, CCTV camera and police “bodycam” footage, news stories, children’s books, the entire text of corporate filings and so on. The kind of information researchers once relied on for inspiration is now machine readable: what was once solely mental data is increasingly becoming actual data.

We suggest these changes can be leveraged to expand how we generate hypotheses. Currently, researchers do of course look at data to generate hypotheses, as in exploratory data analysis (EDA). But EDA depends on the idiosyncratic creativity of investigators who must decide what statistics to calculate. In contrast, we suggest capitalizing on the capacity of machine learning algorithms to automatically detect patterns, especially ones people might never have considered. A key challenge, however, is that we require hypotheses that are interpretable to people. One important goal of science is to generalize knowledge to new contexts. Predictive patterns in a single dataset alone are rarely useful; they become insightful when they can be generalized. Currently, that generalization is done by people, and people can only generalize things they understand. The predictors produced by machine learning algorithms are, however, notoriously opaque — hard-to-decipher “black boxes.” We propose a procedure that integrates these algorithms into a pipeline that results in human-interpretable hypotheses that are both novel and testable.

The problem area on which the authors chose to test their new method is one they know well: biased bail decisions.

Contextualizing the Problem

In recent years, Mullainathan, Jens Ludwig, and a handful of other researchers who straddle the worlds of social science and computer science have been producing a widely-followed series of papers on algorithmic bias. Many of the papers in this area focus on bail decisions. It is a somewhat ideal environment in which to do this kind of research, all things considered. The outcome variables are public, there is a lot of (bias-prone) human discretion from judges’ decisions, statistical algorithms are sometimes used to determine risk scores to jail or release defendants, both numerical data and text are available on the crimes, and additional data is available about the defendants themselves — even their photos in some cases.

Researchers have been utilizing this data to ask and answer questions such as: Can we produce bail algorithms that are more accurate than judges?, Can we produce bail algorithms that minimize seemingly unfair racial bias?, or What features seem to be most associated with unfair treatment from judges?

The latter question is the one that Ludwig and Mullainathan chose to be their subject of investigation in this paper: Can we use machine learning to help generate new theories for what features of a defendant seem to bias a judge one way or another? This question is particularly interesting because, as in many areas with large datasets available, the machine learning algorithms far outperform the hypothesis-based statistical models put together by people like economists — which are often regression models.

The machine learning algorithms are clearly seeing some patterns that are just not captured by existing hypotheses. So, Ludwig and Mullainathan set out to see if they could get similar neural net-based approaches to unpack what these complex models were seeing.

And…it worked. Their methods discovered hypotheses that were interpretable, novel, and seemingly right.

The disparity between ML models and regression models in this area

When the researchers built deep learning models to predict judges' decisions, the defendants mugshot photos proved to be extremely predictive of judge behavior. This, on its own, accounted for a quarter to half of the predictable variation in detention — depending on whether one uses R-squared or AUC. Contextualizing this for the readers, the authors write, “Defendants whose mugshots fall in the bottom quartile of predicted detention are 20.4 percentage points more likely to be jailed than those in the top quartile. By comparison, the difference in detention rates between those arrested for violent versus non-violent crimes is 4.8pp.”

Of course, some percentage of what the algorithm was ‘seeing’ in the photo was inevitably going to be things that have already been hypothesized and accounted for in the existing social science literature and their regression models. Some of these prior hypotheses include things like: gender, race, race, skin color, and other facial features suggested by existing research.

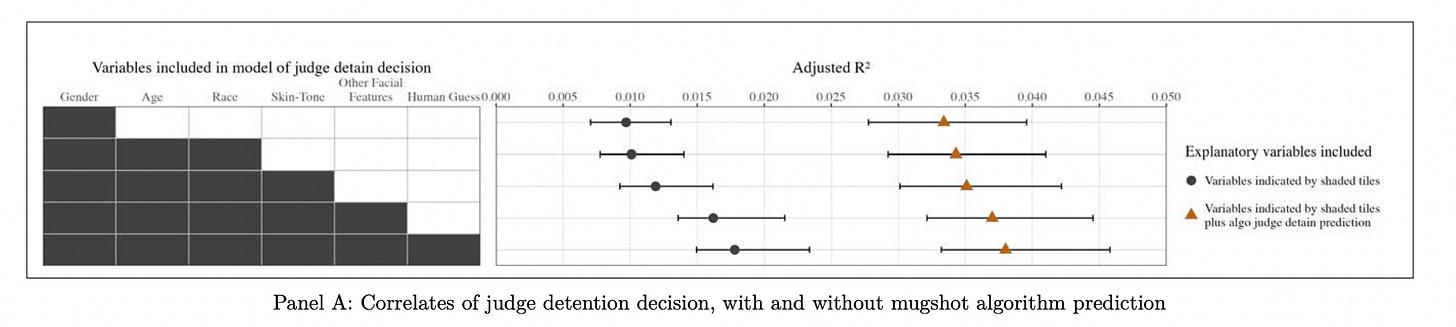

Panel A of Figure I in the paper demonstrates that the authors' ML algorithm is finding far, far more than what the causally-focused models account for. Even if one adds in a column that accounts for human guesses of judge behavior based on the photos, only statistically accounting for those factors does not come close to the algorithm’s prediction of judge behavior. In the figure below, the panel on the left indicates which variables are being controlled for in the rightmost panel where the R-squared achieved by the existing hypotheses in the literature is compared to the R-squared of a machine learning algorithm using that information plus the mugshot pixel information.

Now, while impressive, that result is not in and of itself groundbreaking. We have known for a while that basic statistical methods such as OLS often cannot touch the performance of machine learning algorithms when dealing with high-dimensional data such as cell phone data, online clickthrough data, images, text, etc.

What has vexed us is the ability to interpret what’s happening in a human-explainable way. Even attempting to understand what neural nets are doing as they learn primitive tasks such as basic addition is a handful for remarkably intelligent researchers. Explainable hypotheses on applications like this, many feel, have seemed quite out of reach in the near term.

But that is exactly what the authors succeed in doing a convincing job of. In the end, they are able to produce a very similar chart to the one above — demonstrating marked improvements based on new, human-interpreted, ML-based hypotheses rather than just a black box algorithm.

Their new pipeline, in part, leverages M-Turkers learning what the algorithm was seeing. The M-Turkers' descriptions of what the algorithm was seeing — in word form — became hypotheses. These hypotheses were fed to separate M-Turkers who added those hypotheses — as text labels — to the test set. And the basic statistical model — similar to the simple ones often used in social science literature — was able to achieve substantial increases in performance by controlling for these new machine-discovered and human-interpreted features.

Let’s explore how exactly they did this in more detail. (Feel free to only skim the steps of the pipeline section if you don’t care to know the details.)

The Pipeline

This section contains a condensed version of the steps that made up the authors' workflow for generating human-interpretable hypotheses leveraging machine learning methods. Please check out the paper if you’d like to know the minutiae of how certain individual steps were performed. (The paper plus appendix came out to 130 pages, so the authors really do get into a lot of detail on all the steps for those curious.)

Step 1: Identification of a statistical trend of interest

In this case, specifically, the authors built a supervised learning algorithm — with an outcome variable based on judges' bail decisions — and identified that a surprising amount of the predictable variation in judges’ decision-making came from the defendants' faces. They did this using an ensemble approach with gradient-boosted decision trees on the structured administrative data (current charge, prior record, age, gender, etc.) and a convolutional neural net trained on the raw pixel values from the mugshots.

Step 2: Checking if the trend of interest is ‘unknown to the literature'

This is the step displayed in the graphic in the preceding section — comparing the R-squared of the ML approach to the simpler models which control for hypotheses from the social science literature. The authors ran an OLS regression controlling for the variables that represent positive hypotheses in the existing literature to see how much of the variation, if any, that the ML ensemble method identifies that is not accounted for by these variables. The answer was: a lot.

To do this, for this particular use case, the authors needed to employ people to label some images with things like race, skin tone, etc. What this step looks like will vary based on the application. But what matters for this high-level overview is that the authors did what they needed to do to label the data so previously proven hypotheses could be controlled for. With that done, the algorithm was still finding a ton of predictable variation that those variables did not account for.1

Step 3: Morphing

This step is the most pivotal (and technically complex) of the paper. The authors write:

The algorithm has made a discovery: something about the defendant’s face explains judge decisions, above and beyond the facial features implicated by existing research. But what is it about the face that matters? Without an answer, we are left with a discovery of an unsatisfying sort. We will have simply replaced one black box hypothesis-generation procedure (human creativity) with another (the algorithm).

The authors need to find a way to unpack this in a human-understandable way. That is the crux of the paper. First, they explain why common computer science methods like saliency maps do not do the job for cases like this.

A common solution in computer science is to forget about looking inside the algorithmic black box and focus instead on drawing inferences from curated outputs of that box. Many of these methods involve gradients…The idea of gradients is useful for image classification tasks because it allows us to tell which pixel image values are most important for changing the predicted outcome.

For example, a widely used method known as “saliency maps” uses gradient information to highlight which specific pixels are most important for predicting the outcome of interest (Baehrens et al., 2010; Simonyan et al., 2014). This approach works well for many applications like determining whether a given picture contains a given type of animal, a common task in ecology (Norouzzadeh et al., 2018). What distinguishes a cat from a dog? A saliency map for a cat detector might highlight pixels around, say, the cat’s head: what is most cat-like is not the tail, paws or torso, but the eyes, ears and whiskers. But more complicated outcomes of the sort social scientists study may depend on complicated functions of the entire image.

The authors elaborate:

In the cat detector example, a saliency map can tell us that something about the cat’s (say) whiskers are key for distinguishing cats from dogs. But what about that feature matters? Would a cat look more like a dog if its whiskers were, say, longer? Or shorter? More (or less?) even in length? People need to know not just what features matter but how they must change to change the prediction. For hypothesis generation, the saliency map under-communicates with humans.

For something like a human face’s age, age is a function of almost all parts of our faces. So, a saliency map highlights almost everything. That isn't helpful.

So, the authors pursue a “morphing” procedure. Instead of highlighting pixels, they change them in the direction of the gradient of the predicted outcome. This, essentially, creates a new synthetic face that has a different predicted outcome. They describe the idea as follows:

Our approach builds on the ability of people to comprehend ideas through comparisons, so we can show morphed image pairs to subjects [M-Turk workers] to have them name the differences that they see.

The top row of the following graphic shows how, for a factor like age, the saliency map covers the entire image. The bottom panel of the graphic demonstrates the authors' morphing procedure done in a way that seeks to increase predicted age — just as an example.

The authors constrain their morphing procedure in such a way that it attempts to change as little as possible about the images while maximizing the detention risk of the synthetic images. In addition, they also construct the procedure in such a way that the morph produces differentiation orthogonal to traits that are already accounted for by hypotheses in the existing literature. In short, these are new faces are minimally morphed to achieve a maximal increase in the probability of certain bail decisions. And they have been morphed in such a way that the traits that have been changed are not traits accounted for in the existing literature.2

Step 4: Humans prove they can learn new, predictive features from the morphs

The researchers then ask M-Turkers to look at image pairs — with one morphed image morphed to have a higher predicted harshness of sentence than the other. These subjects, after being given a short set of images and correct answers to have a chance to ‘learn’ the pattern, were then shown many pairs of images and asked to guess which image expresses a higher likelihood of a harsh bail decision. The M-Turkers, on average, improved from more or less 50/50 guessing at the start of the round to a 67% accuracy rate by the end of the round.

You can imagine the following image — with the caption under the photos showing the correct answer — representing what the training process looked like.

As a point of reference: the authors also generated the following ‘intermediate steps’ for that particular photo to give us an idea of what the image morphs look like for detention probabilities between .41 and .13

Step 5: Humans name the heuristics they were using to make more accurate predictions

Next, given a set of humans clearly just demonstrated that they were learning the differences between the morphs somehow and using the knowledge to make more accurate predictions, the researchers simply asked them to describe what they were doing.

The M-Turkers would have just looked at many pairs of images that look like those below and been asked to describe what traits separate one column from the other.

The authors gave these text descriptions to separate research assistants and asked them to create basic category labels that captured the mostly synonymous human descriptions. The humans' unstructured text comments were obviously a bit noisy, but the authors show that — even in a word cloud — a pretty clear first hypothesis starts to emerge. Some of the most common words are things like “cleaner,” “shaved,” “scruffy,” “moustache,” and “shorter.”

The researchers called this feature “well-groomed.” According to the humans who were making accurate predictions, being well-groomed helps a lot!

Step 6: Checking the new hypothesis' validity by adding it into the regression model

Now, with this new hypothesis in place, the next step is to check if it holds up in practice. So, in the next step, the researchers asked a new set of 343 humans to label over 32,000 mugshots in the training and validation set according to how well-groomed they were on a 9-point scale. Once they did this, the authors found that this well-groomed variable was very highly correlated with the algorithm's predictions. A 1-point increase in standard deviation was associated with a 1.74% decline in predicted detention risk. A 1.74% decrease in detention risk is a 7.5% reduction in the base rate.

The new variable also significantly increased the predictiveness of the new version of the full model. The authors break down the explanatory power of the new variable, saying: “Another way to see the explanatory power of this hypothesis is to note that this coefficient hardly changes when we add all the other explanatory variables to the regression…despite the substantial increase in the model’s R-squared.”

With that, it seems they've found a new, ML-enabled, human-interpretable hypothesis that is likely correct: being well-groomed seems to substantially bias judges in your favor.

Step 7: Iterating

Next, the authors did it again!

The cool thing about this procedure is that it's iterable. This well-groomed variable explains some of the variation in the algorithm’s prediction of the judge, but not all of it. So, the authors continue with the above steps — now with a defendant’s well-groomed-ness labeled in the dataset alongside things like race and charge — and attempt to find another novel hypothesis orthogonal to what has already been labeled and accounted for in the data.

The authors write:

Note that the order in which features are discovered will depend not just on how important each feature is in explaining the judge’s detention decision, but also on how salient each feature is to the subjects who are viewing the morphed image pairs. So explanatory power for the judge’s decisions need not monotonically decline as we iterate and discover new features.

To isolate the algorithm’s signal above and beyond what is explained by well-groomed, we wish to generate a new set of morphed image pairs that differ in predicted detention but hold well-groomed constant. That would help subjects see other novel features that might differ across the detention-risk-morphed images, without subjects getting distracted by differences in well-groomed.

At this point, they note that this iteration procedure raises several technical challenges because, if they used the same procedure as in the first step, they would be orthogonalizing against predictions of well-groomed rather than objective well-groomed-ness — and orthogonalizing against a prediction can be an error-prone process. So, instead, they built a new detention prediction algorithm curated on a training set that was limited to pairs of images matched on the features that they were looking to orthogonalize against. In this particular step, that meant that they could, as they put it, “use the gradient of the orthogonalized judge predictor to move in GAN latent space to create new morphed images that have different detention odds but are similar with respect to well-groomed.”

These new images, shown below, were then put through the same steps as before. The authors also note that — as you will see — the morph generator did not do a perfect job of orthogonalizing against well-groomed-ness. But what is important is that there is still what they believe to be “salient new signal.”

With these image pairs, a second new salient facial feature comes to light in the eyes of the M-Turkers: how “heavy-faced” or “full-faced” a defendant is.

In fact, heavy-faced seemed to be even more important than well-groomed was. A one standard deviation improvement in the well-groomed variable was associated with a 7.5% reduction in the base rate. A one standard deviation improvement in the full-faced variable was associated with a 9.3% improvement.

The authors, once again, have the M-Turkers go through and add this label to the relevant dataset so they could run the regression model controlling for this new variable as well. Once again, it yielded an improvement.

The authors conclude their section on ‘Iteration,’ saying:

In principle, the procedure could be iterated further. After all, well-groomed, heavy-faced plus previously known facial features all taken together still only explain 27% of the variation in the algorithm’s predictions of the judges’ decisions. So long as there is residual variation, the hypothesis-generation crank could be turned again and again. But since our goal is not to fully explain judges’ decisions but rather to illustrate that the procedure works and is iterable, we leave this for future work.

Step 8: Evaluating the new hypotheses on true outcome data — rather than predicted outcome data

Before this can be considered a success, one has to check if these new hypotheses are good predictors of actual judge decisions. Up until this point, the models were largely being validated on the (imperfect and noisy) ML predictions of judge predictions rather than actual judge decisions. So, this step is the one that really matters. Well-groomed and full-faced could be correlated with some part of the algorithm that is actually unrelated to actual judge behavior.

When the authors did this, building a regression model using all of the original variables as well as well-groomed and full-faced, they noted that “the explanatory power of our two novel hypotheses alone equals about 28% of what we get from all the variables together.”

The gray dot in the bottom-most row in the right panel demonstrates the improvement of the regression model when well-groomed and heavy-faced are controlled for. The magnitude of improvement when including both of these variables is similar to when skin tone was found to matter more than race in many cases — which was considered a very significant and not necessarily intuitive finding made by humans in this field of social science.

The implications of all this

After running an experiment at a public defender’s office and legal aid society, the authors determined that it did not seem that the practitioners previously understood the extent to which these newly-discovered characteristics correlated with outcomes. The authors noted that they were mentioned here and there by some of the practitioners, “but these were far from the most frequently mentioned features.”

The researchers also had the practitioners guess which of a pair of images was more likely to be detained — just as the authors did with M-Turkers. They found that the effect of the practitioners' guesses in the performance of the full model was four times as large as the M-Turker guesses. In fact, the practitioners' guesses were about as accurate as the algorithm itself. The researchers elaborated on the relationship between the practitioners' predictions and the algorithm's predictions, saying:

Yet practitioners do not seem to already know what the algorithm has discovered. We can see this in several ways in Table VI. First, the sum of the adjusted R-squared values from the bivariate regressions of judge decisions against practitioner guesses and judge decisions against the algorithm mugshot-based prediction is not so different from the adjusted R-squared from including both variables together in the same regression…We see something similar for the novel features of well-groomed and heavy-faced specifically as well. The practitioners and the algorithm seem to be tapping into largely unrelated signal.

This pipeline — leaning heavily on neural nets and a human-feedback loop leveraging basic human competencies — generated a new, good idea.

While Swanson’s idea was an example that used primitive technology in the general area to which LLMs can contribute — text-based idea discovery — Mullainathan and Ludwig demonstrate how modern neural nets can be leveraged to generate clever theories in a completely different realm of application.

Is what the computer is doing creativity? I’d say no…but I’d also say it doesn’t matter. The increased capacity of modern computing tools simply is. It's up to us to find ways to use the new capacities well. If researchers use them well, it should free up human minds to focus their efforts on entirely new areas of creativity than they have before. This won't impact all hypotheses. Many hypotheses will come to be in the same ways that they always have. But, hopefully, many hypotheses will also come to be through new combinations of humans and computing. Both Don Swanson's work from the 1980s and the morphing work of the more recent authors lay a fantastic base for understanding what is now possible in this realm.

Researchers should be taking advantage of these ideas wherever they can! In line with this goal, the following section contains one researcher's ideas for possible applications of these ideas which could be fruitful in his own field: organic chemistry.

The ideas are largely inspired by Swanson’s A→B→C methodology and the work that led to the (recent) second Nobel Prize in Chemistry for Karl Sharpless.

Can LLMs help enable 100 more Karl Sharplesses?

Tools that accomplish Don Swanson-like tasks are particularly useful in areas of science in which the capacity to read and remember a large volume of research papers — from all languages and time periods — is a distinct advantage. You could think of this as having a bunch of really dumb research assistants who are very well-read and have great memories — but they're still dumb. One current research area in which this would be a distinct advantage is the field of organic chemistry.

The rest of this section was written by Harvard Ph.D. organic chemist, blogger, and current founder, Corin Wagen. Corin was the first person to tell me about Karl Sharpless' idea-generation process. Corin, after reading a draft of the Don Swanson and Sendhil & Ludwig sections, set out to write a section describing how he thought those ideas could fit into his own field in the near-term and make a big difference.

Several factors make organic chemistry a promising domain for LLM-assisted hypothesis generation. Organic chemistry is a very old field, with almost two centuries of publications to sift through, and there are a lot of venerable results which have been forgotten and unearthed with great fanfare (vide infra). Additionally, it’s relatively easy to validate the accuracy of previous results in organic chemistry by reproducing the reactions—few organic reactions require bespoke instrumentation, and there’s an expectation that one lab’s reactions ought to be easily reproducible by other labs (e.g.).

The work of K. Barry Sharpless illustrates how knowledge of the old literature can allow for important breakthroughs today. Since around 2000, Sharpless has been advocating for a new paradigm in chemical synthesis, termed “click chemistry,” for which he was co-awarded the 2022 Nobel Prize. In his words:

The goal [of click chemistry] is to develop an expanding set of powerful, selective, and modular “blocks” that work reliably in both small- and large-scale applications. We have termed the foundation of this approach “click chemistry,” and have defined a set of stringent criteria that a process must meet to be useful in this context. The reaction must be modular, wide in scope, give very high yields, generate only inoffensive byproducts that can be removed by nonchromatographic methods, and be stereospecific (but not necessarily enantioselective).

This approach aims to avoid many of the problems that render chemical synthesis so inefficient and capricious today, and thus accelerate chemical discovery. But it’s not easy to find reactions with the desired characteristics: to date, Sharpless has focused on two reactions: copper-catalyzed azide–alkyne cycloaddition (CuAAC) and sulfur(VI) fluoride exchange (SuFEx). Importantly, both of these reactions were discovered long ago: CuAAC was developed by Rolf Huisgen in the 1960s (ref), while SuFEx dates from the 1920s German literature (ref).

These reactions, thus, are prime examples of undiscovered public knowledge. In both cases, the actual experimental results had been published for many decades, yet their relevance to modern synthesis wasn’t realized until someone (Sharpless) put the pieces together. And it wasn’t just the novelty of the click chemistry concept that led to these results being forgotten: the original click chemistry paper was published in 2001, yet SuFEx wasn’t rediscovered until 2014, meaning that there was about a decade in which anyone could have recognized that these 1920s sulfur(VI) reactions satisfied the demands of click chemistry, and yet no one did.

What makes Sharpless so successful? In part, it’s his vision for how chemical synthesis could be different — having the creativity and courage to be iconoclastic and push the field in a new direction. But it’s also his ability to discover and remember old results that fit with his vision that’s made him so successful. It’s not really possible to sit down and design a reaction that satisfies a list of desired properties; it’s much more effective to simply look through all previous reactions and find one that meets your criteria.

If we consider a cartoonish version of the Sharpless production function, then, we might arrive at the following list:

Have a bold vision for the future of the field.

Read a lot of old papers and remember them.

Speak German.

Have a good “eye” for promising old results that might fit into your vision.

Attributes 1 & 4 are likely to remain the domain of humanity for the foreseeable future — it’s silly to expect LLMs to develop good taste or a keen scientific vision. But attributes 2 & 3 are ripe for automation: an LLM can digest papers much more quickly than a human, and in far more languages than any scientist can learn. Perhaps there are future potential Sharpless-type scientists out there who have been rate-limited by the speed at which they read, or their inability to speak German!

Unfortunately, application of LLMs to these questions is hamstrung by the fact that ChatGPT and its ilk don’t have access to most research papers, which are stuck behind publisher paywalls. But one could imagine LLMs being very useful research assistants if this limitation were removed. To demonstrate, I asked ChatGPT to suggest a click reaction of allenes, another bench-stable but high potential energy functional group that (to my knowledge) no one has proposed a click reaction for. ChatGPT responded with a very promising lead, the “allene–amine coupling reaction”:

This answer is quite good: ChatGPT identifies that the reaction is highly chemoselective, displays excellent functional group tolerance, and proceeds in water as a solvent, all qualities that match the criteria for inclusion as a click reaction. Unfortunately, the reaction doesn’t exist: Google doesn’t return any results for “allene–amine coupling,” the closest examples I can find don’t match the descriptions given at all (poor functional group tolerance, not compatible with water), and the references ChatGPT gives me upon further interrogation are completely made up.

Despite this failure, the response is encouraging. ChatGPT clearly understands what it’s supposed to be looking for (type of reaction, behavior with different functional groups, solvent compatibility), but is hamstrung by the fact that it doesn’t actually seem to know chemistry beyond the level of Wikipedia. This is a pretty fundamental limitation of LLMs on their own, but not an insurmountable one: the use of retrieval augmentation and similar techniques has been demonstrated to allow ChatGPT and other LLMs to reason much more accurately based on extant data, and there’s no reason that scientific data ought to behave any differently.

While there are plenty of (proprietary) ways to search the literature based on chemical structures (like SciFinder and Reaxys), the ability of LLMs to perform semantic search opens the door to a wider variety of applications. A chemist who knows the properties they wish their reaction to have, but not the reagents involved, would be hard-pressed to use state-of-the-art tools to find an answer. But one could imagine a version of ChatGPT equipped with the ability to semantically query a database of patents and scientific publications (with the information stored in a machine-friendly way) being an extremely useful research assistant to the practicing organic chemist. Armed with such a tool, a chemist could ask plenty of useful questions:

“I’m looking for photochemical cyclization reactions that generate piperidines, published before 1960.”

“Robust and functional-group tolerant reactions that couple allenes to another molecule and work in water.”

“Regioselective cycloadditions of bench-stable starting materials that occur at room temperature”

These prompts are presently very challenging to answer, limiting the ability of scientists to locate relevant literature results, but hypothetically ought to be easy enough to answer based on the data that exists. And while the answers to these particular questions probably won’t lead to any Nobel prizes, enabling scientists to query arcane corners of the literature quickly and easily would allow for more workflows like the one demonstrated by Sharpless, and thus be beneficial for scientific innovation.

What prevents this from happening today? Developing a suitable database of chemical data would entail scraping through tens of thousands of publications and patents and extracting the data contained therein, both structured (reaction conditions, yields, selectivities, etc.) and unstructured (the actual text of the papers). While efforts in this direction have been made (e.g. the Open Reaction Database for structured data), doing so for all the old journals that Sharpless and others know so well would be a sizable undertaking — but not insurmountable, contingent on IP issues being worked out.

What's next?

Eric taking over again.

In my eyes, the work of both Swanson and Mullainathan & Ludwig serve as massively important proofs of concept that computationally-augmented hypothesis generation is much more than sci-fi-inspired wishful thinking.

One ideal place to start might be a scientific philanthropy scraping together the resources to put together a minimum viable dataset in an area ripe for experimentation with the idea — say, organic chemistry — and getting some researchers to see just how much they can do with the basic tool.

If it works, you can scale the hell out of it. If it doesn't, we can iterate, learn, and hopefully try again. Building up resources and know-how in the area of computer-augmented hypothesis generation seems to be an area of significant promise that is obviously worth pursuing.

Thanks for reading:)

Of course, the chance always exists that performance disparities are coming from the different statistical procedures and not one method “discovering” variables that the other is not.

As a note: To ensure that the morphed faces looked like realistic faces — rather than images produced by following the gradient that have different predicted outcomes but no longer look like realistic faces — the authors use a GAN. If you want to know more about this, check out page 25 of the paper, Appendix C, or Figure V.